Research Interests

We live in a three-dimensional world, but each of our eyes only receives a two-dimensional image. How does our brain combine these images?

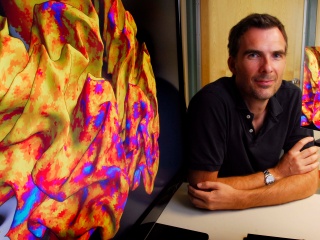

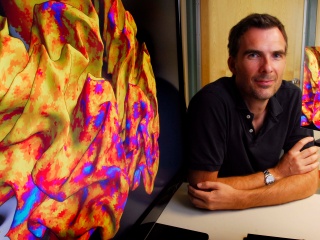

Research in our laboratory focuses on the neural mechanisms underlying visual perception, with an emphasis on motion and depth perception. Signals from the two eyes need to be combined in visual cortex in order to inform us of the position and movement of objects.

The study of motion and depth perception helps uncover the function and underlying architecture of the brain’s visual system and provides us with a model by which to understand visual processing in general. To do so we use complementary behavioral, neuroimaging and computational approaches.

Our research improves the understanding and treatment of visual impairments with a cortical basis, such as amblyopia (lazy eye) and motion blindness, ultimately leading to the recovery of lost visual function.

Altered white matter in early visual pathways of humans with amblyopia

Amblyopia (better known as lazy eye) is a disorder where vision is reduced, without a clear deficit in the eyes themselves. It occurs when the images from our two eyes are poorly correlated during development. Over time the brain tends to suppress the image from the ‘lazy’ eye, in favor of the ‘good’ eye.

Such suppression leads to abnormal visual experience, and as such amblyopia is of interest not only as a clinical disorder, but also as a human model of the interaction between sensory input and brain development.

In this study we asked what the neuroanatomical consequences of visual suppression are. We show that amblyopia leads to structural changes in early visual pathways connecting the thalamus to striate and extrastriate visual cortex. This is most likely due to changes in corticothalamic feedback projections. Our work gives us a clearer picture of visual development and benefits the diagnosis and treatment of visual disorders such as amblyopia.

See our paper (.pdf) in Vision Research for more information.

Motion processing with two eyes in three dimensions

The movement of an object toward or away from the head is perhaps the most critical piece of information an organism can extract from its environment. Such 3D motion produces horizontally opposite motions on the two retinae.
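The geometry behind these opposite motions can be illustrated with a minimal sketch. It is not taken from the paper: the function names, the coordinate convention (eyes on the x-axis looking along +z, rightward angles positive), and the 6.5 cm interocular distance are all assumptions made for illustration.

```python
import math

def eye_angles(x, z, ipd=0.065):
    """Horizontal direction (radians) of a point at (x, z) as seen from each eye.

    Assumed layout: left eye at x = -ipd/2, right eye at x = +ipd/2,
    both at z = 0 and looking along +z. Positive angles are rightward.
    """
    left = math.atan2(x + ipd / 2, z)
    right = math.atan2(x - ipd / 2, z)
    return left, right

def angular_velocities(x, z_start, z_end, dt, ipd=0.065):
    """Finite-difference angular velocity in each eye as the point moves in depth."""
    l0, r0 = eye_angles(x, z_start, ipd)
    l1, r1 = eye_angles(x, z_end, ipd)
    return (l1 - l0) / dt, (r1 - r0) / dt

# A point on the midline approaching the head from 1.0 m to 0.9 m in 0.1 s.
v_left, v_right = angular_velocities(x=0.0, z_start=1.0, z_end=0.9, dt=0.1)
print(v_left, v_right)  # the two values have opposite signs
```

For a point approaching along the midline, the direction of the point drifts rightward for the left eye and leftward for the right eye, so the two horizontal velocities have opposite signs and, in this symmetric case, equal magnitude.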

Canonical conceptions of primate visual processing assert that neurons early in the visual system combine monocular inputs and extract 1D (“component”) motions; later stages then extract 2D pattern motion from the output of the earlier stage. We found, however, that 3D motion perception is in fact affected by the comparison of opposite 2D pattern motions from each eye individually.

These results imply the existence of eye-of-origin information in later stages of motion processing and therefore motivate the incorporation of such eye-specific pattern-motion signals in models of motion processing and binocular integration.

A paper on our findings has been published in the Journal of Vision. You can also view a demonstration.

Neural circuits underlying the perception of 3D motion

The macaque middle temporal area (MT) and the human MT complex (MT+) have well-established sensitivity to both 2D motion and position in depth. Yet evidence for sensitivity to 3D motion has remained elusive.

We showed that human MT+ encodes two binocular cues to 3D motion, one based on estimating changing disparities over time, and the other based on interocular comparisons of retinal velocities. By varying orientation, spatiotemporal characteristics, and binocular properties of moving displays, we distinguished these 3D motion signals from their constituents, instantaneous binocular disparity and monocular retinal motion. Furthermore, an adaptation protocol confirmed MT+ selectivity to the direction of 3D motion. These results demonstrate that critical binocular signals for 3D motion processing are present in MT+, revealing an important and previously overlooked role for this well-studied brain area.
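The two binocular cues differ in the order of operations: one compares the eyes first and then tracks the change over time, the other measures motion in each eye first and then compares the eyes. The toy sketch below makes that order explicit. The function names and sample values are hypothetical and do not reproduce the stimuli or analysis used in the study.

```python
def differentiate(samples, dt):
    """Finite-difference rate of change between successive samples."""
    return [(b - a) / dt for a, b in zip(samples, samples[1:])]

def changing_disparity(left, right, dt):
    """Compute binocular disparity at each time step, then its change over time."""
    disparity = [l - r for l, r in zip(left, right)]
    return differentiate(disparity, dt)

def interocular_velocity_difference(left, right, dt):
    """Compute the velocity in each eye separately, then compare across eyes."""
    v_left = differentiate(left, dt)
    v_right = differentiate(right, dt)
    return [vl - vr for vl, vr in zip(v_left, v_right)]

# Hypothetical horizontal positions (arbitrary angular units) of one point
# in each eye, sampled every 0.1 s, drifting in opposite directions.
left = [0.00, 0.01, 0.03, 0.06]
right = [0.00, -0.01, -0.03, -0.06]
dt = 0.1

cd = changing_disparity(left, right, dt)
iovd = interocular_velocity_difference(left, right, dt)
print(cd)
print(iovd)
```

For a single tracked point the two computations give the same numbers up to rounding, because the change of a difference equals the difference of the changes. What separates the cues is which intermediate signal they rely on: instantaneous disparity in one case, monocular velocity in the other.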

A paper on our findings has been published in Nature Neuroscience. You can also download the supplementary materials (.pdf) and view animations of the stimuli.

Percepts of motion through depth without percepts of position in depth

It is a fundamental challenge for the visual system of primates to accurately encode 3D motion.

Prior work on the perception of static depth has employed binocularly 'anti-correlated' random dot displays, in which corresponding dots have opposite contrast polarity in the two eyes: a black dot in one eye is paired with a white dot in the other eye. Such displays have been shown to yield weak, distorted, or nonexistent percepts of position in depth.
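As a rough illustration of what anti-correlation means for such a display, the sketch below builds matched and contrast-reversed dot sets and compares them. The representation of each dot as a contrast of +1 (white) or -1 (black), and the function names, are assumptions for illustration only.

```python
import random

def make_dot_pair(n_dots, anticorrelated, seed=0):
    """Return per-dot contrasts for the left and right eye.

    Each dot is +1 (white) or -1 (black). In the anti-correlated case the
    right eye's dot has the opposite contrast polarity to the left eye's.
    """
    rng = random.Random(seed)
    left = [rng.choice((-1, 1)) for _ in range(n_dots)]
    right = [-c if anticorrelated else c for c in left]
    return left, right

def mean_contrast_product(left, right):
    """Average product of corresponding dot contrasts across the two eyes."""
    return sum(l * r for l, r in zip(left, right)) / len(left)

correlated = mean_contrast_product(*make_dot_pair(200, anticorrelated=False))
anticorrelated = mean_contrast_product(*make_dot_pair(200, anticorrelated=True))
print(correlated, anticorrelated)
```

Matched dots give a mean contrast product of 1.0, while contrast-reversed dots give -1.0: every dot still has a partner at the corresponding location in the other eye, but the partner never matches in polarity.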

We showed that the perception of motion through depth is not impaired by binocular anticorrelation. Although subjects were not able to accurately judge the depths of these displays, they were surprisingly able to perceive the direction of motion through depth.

A paper on our findings has been published in the Journal of Vision. A demonstration accompanies the paper.

The stereokinetic effect

An ellipse rotating in the image plane can produce the 3D percept of a rotating rigid circular disk. In theory, the motion of the 3D percept cannot be reliably inferred based on the 2D stimulus.

However, when we quantitatively estimated the perceived 3D motion, we found that it was nearly identical across observers, suggesting that all observers had the same 3D percept.

We assumed that given the 2D stimulus the visual system generates a rigid 3D percept that is as slow and smooth as possible. The percepts predicted by these assumptions closely matched the experimental data, suggesting that the visual system resolves perceptual ambiguity in such stimuli using slow and smooth motion assumptions.

A paper on our findings has been published in Vision Research. A demonstration accompanies the paper.
